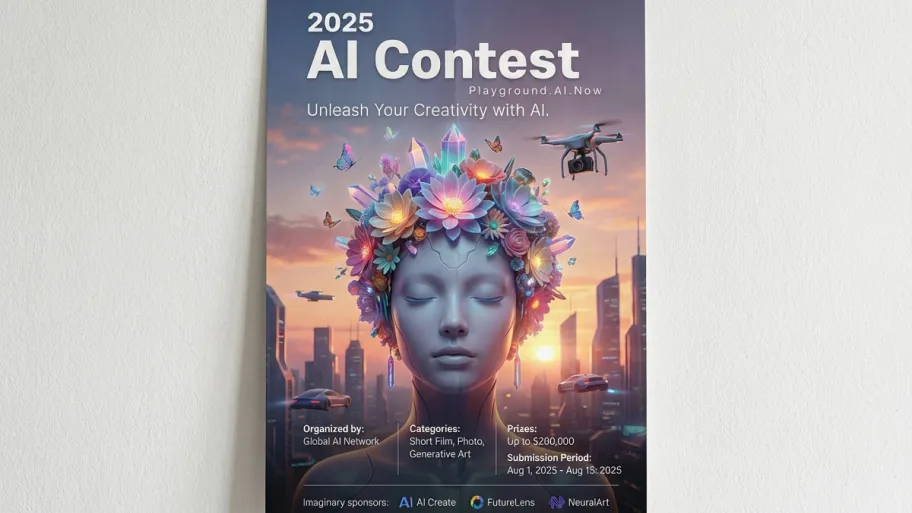

Across contests, platforms, and investigation and verification sites, we provide evidence of whether content is AI-generated or altered, along with manipulation traces and provenance signals.

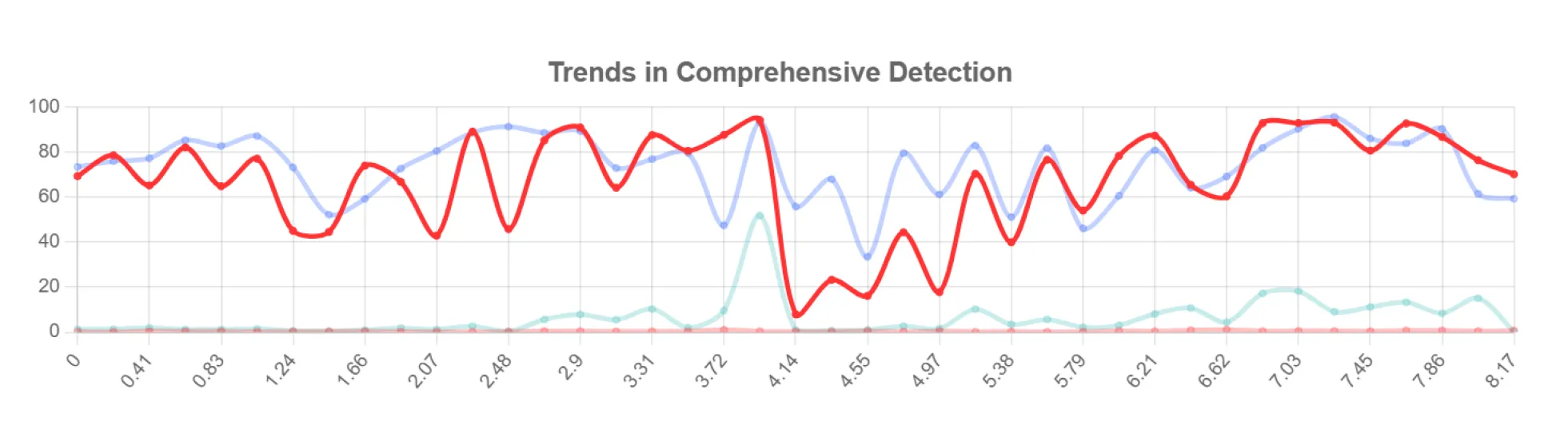

Achieves 90%+ detection accuracy across major deepfake types, including face swaps, lip-sync, and generative AI manipulation.

Flags suspicious portions second by second or segment by segment, enabling fast, evidence-based verification even at high request volumes.

Continuously upgraded using KoDF, a large-scale Korean deepfake dataset, to strengthen performance in real-world conditions.

From technology updates to public and institutional collaborations and generative AI detection applications, we've gathered the latest news and use cases, centered on real-world examples.

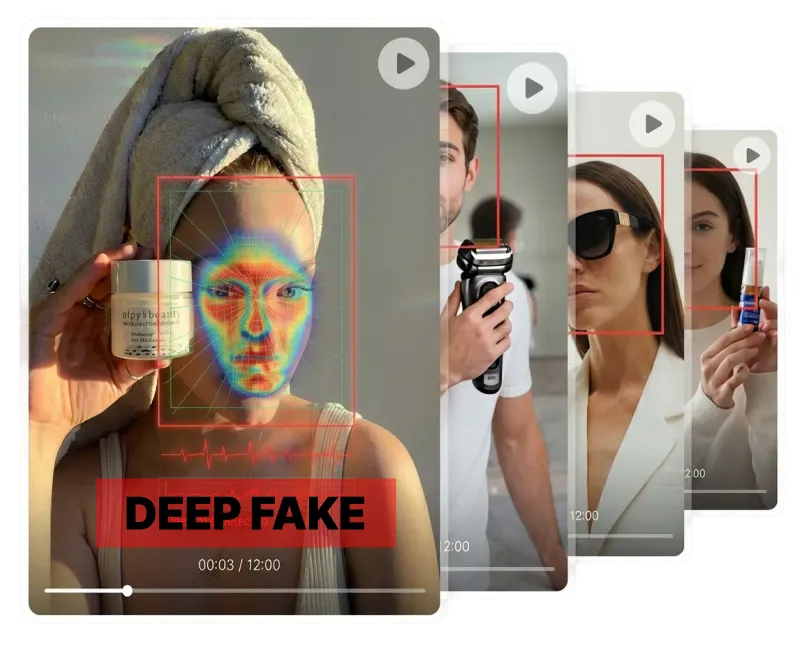

A deepfake is synthetic media in which video, audio, or images are created or manipulated by AI to look real. Deepfakes include face swaps, lip-sync deepfakes, generative AI edits, and voice cloning. They can cause serious harm to trust and security, such as deepfake porn, impersonation, and misinformation.

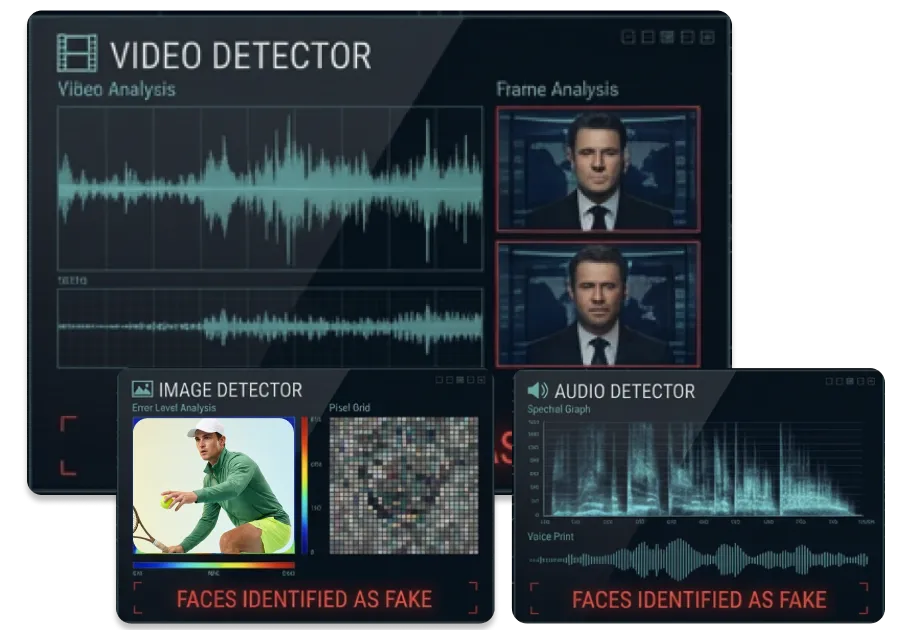

AI Detector analyzes video, audio, and images together to identify deepfake content. It detects major types such as face swaps, lip-sync deepfakes, generative AI manipulation, and voice impersonation. This is especially useful for cases that target specific people, such as K-pop deepfake incidents.

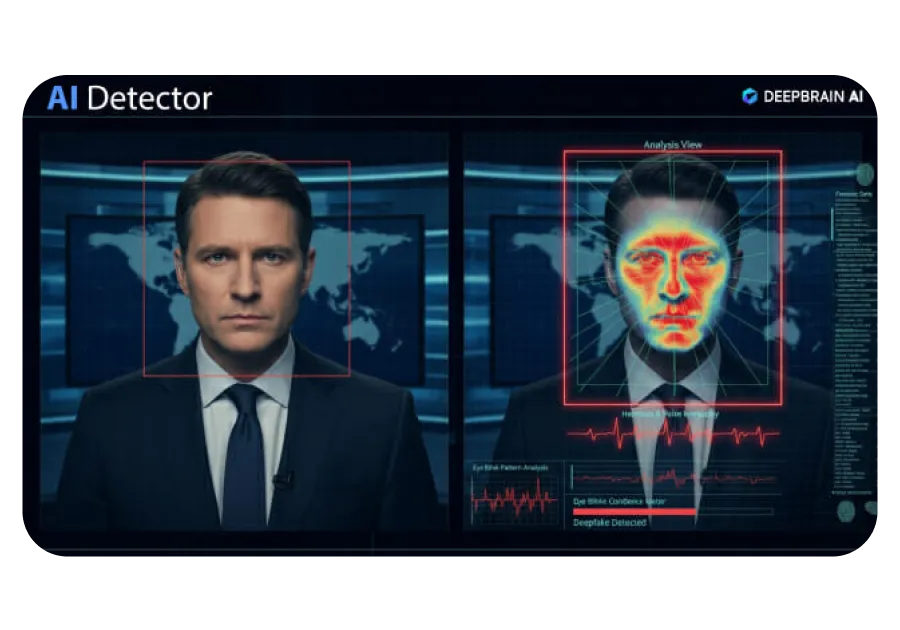

AI Detector uses a multi-step AI pipeline to find manipulation traces and determine authenticity.

It combines machine-learning and deep-learning pattern detection, comparison against original or reference media, and fine-grained analysis of facial and vocal features.

Suspicious portions are marked segment by segment to support evidence-based review.

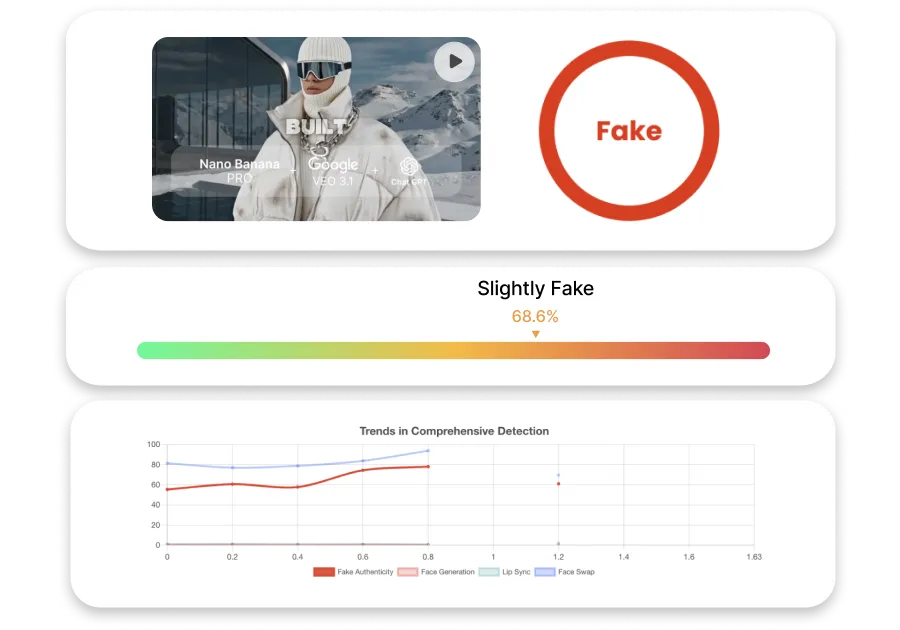

AI Detector provides authenticity results along with an evidence report.

The report includes suspicious-segment timelines, manipulation types, and analysis scores needed for verification.

It is formatted for immediate use in investigations, internal reviews, and risk response.
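To make the idea of a suspicious-segment timeline concrete, here is a minimal sketch, assuming the detector produces one manipulation score in [0, 1] per second of media. The `Segment` class, the `build_timeline` function, and the 0.5 threshold are hypothetical illustrations, not AI Detector's actual report format or method.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """One entry in a hypothetical suspicious-segment timeline."""
    start_s: int      # first flagged second (inclusive)
    end_s: int        # end of the flagged range (exclusive)
    max_score: float  # highest per-second score inside the segment


def build_timeline(scores: list[float], threshold: float = 0.5) -> list[Segment]:
    """Merge consecutive per-second scores at or above `threshold` into segments.

    Assumption (hypothetical): scores[i] is the manipulation score for second i.
    """
    timeline: list[Segment] = []
    start = None
    for i, score in enumerate(scores):
        if score >= threshold:
            if start is None:
                start = i  # open a new suspicious segment
        elif start is not None:
            # Score dropped below threshold: close the open segment.
            timeline.append(Segment(start, i, max(scores[start:i])))
            start = None
    if start is not None:
        # A segment that runs to the end of the media.
        timeline.append(Segment(start, len(scores), max(scores[start:])))
    return timeline


if __name__ == "__main__":
    per_second = [0.1, 0.2, 0.8, 0.9, 0.7, 0.3, 0.1, 0.6, 0.95]
    for seg in build_timeline(per_second):
        print(f"{seg.start_s}s-{seg.end_s}s  max score {seg.max_score:.2f}")
```

Merging adjacent flagged seconds into ranges, rather than listing every second, keeps the timeline short enough to review quickly; the threshold trades missed manipulations against false alarms.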

AI Detector currently achieves 90%+ detection accuracy. Ongoing data and model updates keep performance stable across major deepfake types and improve accuracy over time.

AI Detector typically delivers authenticity results within minutes. It processes video, images, and audio quickly, shortening investigation and verification turnaround. Suspicious portions are highlighted second by second or segment by segment for fast inspection.

AI Detector currently focuses on rapid detection and evidence delivery rather than pre-blocking.

Instead of auto-removing content, it provides clear evidence so responsible parties can take action.

Operational policies and workflow integration can be discussed depending on deployment needs.

Yes. AI Detector can be provided with API and integration options for enterprise and institutional environments.

It fits into existing verification, monitoring, or security workflows.

Integration scope and method are aligned during the onboarding process.

AI Detector operates in accordance with customer security and privacy policies.

Data processing and retention standards can be adjusted to meet environment requirements.

Enterprise-grade security controls are available for institutional deployments.

Submit your request via the [1-Month Free Support Application] button on this page. After reviewing your purpose and the scope of analysis, our representative will contact you with next steps. We support verification requests across public institutions, education, and corporate environments.